Effective PagerDuty Incident Response in Slack

Webhooks allow you to receive HTTP callbacks when incidents are triggered and updated. Details about each event are sent to a URL you specify, such as Slack or your own custom PagerDuty webhook processor. When you start work on a PagerDuty incident, the Slack channel or thread is created with the tool type and incident number in its name, which makes it easy to find.
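Below is a minimal sketch of a custom webhook processor that uses only the Python standard library. It assumes a V3-style JSON payload, with the event type at `event.event_type` and the incident number at `event.data.number`. Check these field paths against PagerDuty's webhook documentation before relying on them. The `pd` tool prefix and the `<tool>-incident-<number>` naming scheme are hypothetical. They mirror the "tool type and incident number" naming described above.

```python
import json
import re
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional


def channel_name(tool: str, incident_number: int) -> str:
    """Build a channel name like 'pd-incident-1234' (hypothetical scheme)."""
    name = f"{tool}-incident-{incident_number}".lower()
    # Slack channel names allow only lowercase letters, digits, hyphens and
    # underscores, up to 80 characters; replace anything else with a hyphen.
    return re.sub(r"[^a-z0-9_-]", "-", name)[:80]


def handle_event(payload: dict) -> Optional[str]:
    """Return the channel to create for a newly triggered incident, else None.

    ASSUMPTION: payload looks like
    {"event": {"event_type": "incident.triggered", "data": {"number": 1234}}}.
    """
    event = payload.get("event", {})
    if event.get("event_type") != "incident.triggered":
        return None
    number = event.get("data", {}).get("number")
    return channel_name("pd", number) if number is not None else None


class WebhookHandler(BaseHTTPRequestHandler):
    """Accepts webhook POSTs; signature verification is omitted in this sketch."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        channel = handle_event(payload)
        if channel:
            # A real processor would call Slack's API here to create the channel.
            print(f"would create #{channel}")
        self.send_response(204)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Run the processor until interrupted (not called on import)."""
    HTTPServer(("", port), WebhookHandler).serve_forever()
```

Call `serve()` to start listening. Before trusting any payload in production, verify the webhook signature.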

The PagerDuty Analytics Slack integration brings key Insights into Slack, providing quick and easy access to important metrics where you work. Slack Insights subscriptions let you evaluate metrics on a recurring basis, making it easier to identify trends and changes. Beyond Insights, you can integrate PagerDuty and Slack the way you want: send instant messages to users or channels in Slack, or connect PagerDuty and Slack with your other cloud apps and run workflows.

SlackOps for PagerDuty

Slack has been taking the business communications industry by storm, and incident collaboration and coordination are no exception. Every day, more enterprises are discussing, diagnosing, and solving incidents through Slack. While Slack is an amazing platform for collaboration, it is not an incident response platform, nor is it well suited to driving an effective process. To deliver an effective PagerDuty incident response in Slack, you need help, and RigD is the solution!

In this series we highlight the key elements of an effective incident response process based on industry best practices, including PagerDuty’s incident response documentation. Each part discusses the purpose and value of one of these elements, shows how to implement it in Slack using RigD, and gives you a sense of the time and cost impact. Let’s take a quick look at the topics covered.

Part 1 – How to Open PagerDuty Incidents from Slack

Opening an incident in Slack can save a good amount of time. More importantly, it can significantly reduce complexity and training effort compared to opening an incident through the PagerDuty web UI. If you open a lot of incidents manually at your organization, this is a must-read.

Part 2 – PagerDuty Who is On Call from Slack in under 10 seconds

Getting to the right person fast saves a tremendous amount of time in incident response. Identifying who is on call and connecting with them is at the core of what PagerDuty does, and doing it directly from Slack saves even more time.

Part 3 – PagerDuty Slack Integration: Automatically Open a Slack Channel for Incidents

You wouldn’t be reading this blog if you were not already using Slack to collaborate on incidents. However, there is a substantial benefit to using a dedicated Slack channel per incident, or at the least a Slack thread. This part shows you how to make that easy.

Part 4 – PagerDuty Incident Status in Slack

Incident updates are a key part of keeping everyone aligned, both internally and externally. A timely external update can be the difference between keeping and losing a customer. Never miss an update by driving them through Slack.

Part 5 – Take Command of your PagerDuty Incident Response

Technical incident response has been modeled after military and emergency services incident response. For both, identifying who is in command and executing an established triage process are crucial. Both can be done with ease using RigD in Slack.

Part 6 – Automate PagerDuty Incident Postmortems to Drive Improvement

The final and most critical element of incident response is the postmortem. Use Slack and RigD to ensure you never miss a postmortem and to drive a better process.

The Impact of an Effective PagerDuty Incident Response

In each part we look at the time and cost savings you can realize by running an effective PagerDuty incident response process in Slack. To demonstrate the value, we use data from two sources. The first is the Rand Group report, which puts the cost of downtime for an enterprise at $5,600 per minute. The second is the PagerDuty ROI study, which computed an average of 20,483 incidents and 14 outages across the enterprises it covered. Each of the elements discussed provides meaningful savings, but in aggregate the results are truly impressive. At 2,162 hours of savings, using RigD is like adding an extra team member. Reducing the related outage costs by 80% is sure to excite everyone who watches the bottom line. Most importantly, though, your customers will appreciate that they can get on with their business faster. Even if your operational workload is only a fraction of these enterprise benchmarks, the benefits still warrant a look at how RigD can transform your operations.
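The arithmetic behind these figures is simple enough to check yourself. The sketch below uses the two numbers quoted above: $5,600 per minute of downtime and 2,162 hours saved. It adds two assumptions of our own. The first is a 2,080-hour work year (40 hours × 52 weeks). The second is an example outage length, which you should replace with your own incident data.

```python
COST_PER_MINUTE = 5_600     # USD per minute of downtime (Rand Group figure quoted above)
HOURS_SAVED = 2_162         # aggregate hours saved (figure quoted above)
WORK_YEAR_HOURS = 40 * 52   # ASSUMPTION: one full-time employee works 2,080 hours a year


def outage_cost(minutes: float, cost_per_minute: float = COST_PER_MINUTE) -> float:
    """Cost of an outage lasting `minutes`."""
    return minutes * cost_per_minute


def outage_savings(minutes: float, reduction: float = 0.80) -> float:
    """Amount saved when the cost of that outage is reduced by `reduction` (0.80 = 80%)."""
    return outage_cost(minutes) * reduction


def fte_equivalent(hours: float = HOURS_SAVED) -> float:
    """Express saved hours as full-time-employee years."""
    return hours / WORK_YEAR_HOURS


if __name__ == "__main__":
    # HYPOTHETICAL example outage length; substitute your own incident data.
    minutes = 60
    print(f"{minutes}-minute outage costs ${outage_cost(minutes):,.0f}")   # $336,000
    print(f"an 80% reduction saves ${outage_savings(minutes):,.0f}")       # $268,800
    print(f"{HOURS_SAVED:,} hours is about {fte_equivalent():.2f} FTE")    # 1.04
```

At about 1.04 full-time-equivalent years, the "extra team member" comparison holds under the 2,080-hour assumption.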

Schedule a demo and meet with the Crew to learn more.

Learn more about RigD and give our Slack App a try.

This section describes what to do during a major incident. See our severity level descriptions for what constitutes a major incident.

Documentation

For your own internal documentation, make sure this page prominently displays all of the necessary information, such as phone bridge numbers, Slack rooms, and important chat commands. Here is an example:

| Chat | Call | Phone |
|---|---|---|
| #incident-chat | https://a-voip-provider.com/incident-call | +1 555 BIG FIRE (+1 555 244 3473) / PIN: 123456 |

Need an IC? Do `!ic page` in Slack.

For executive summary updates only, join #executive-summary-updates.

Security Incident?

If this is a security incident, you should follow the Security Incident Response process.

Don't Panic!

Join the incident call and chat (see links above).

- Anyone is free to join the call or chat to observe and follow along with the incident.

- If you wish to participate, however, you should join both. If you can't join the call for some reason, you should have a dedicated proxy for the call. Disjointed discussions in the chat room are ultimately distracting.

Follow along with the call/chat and add any comments you feel are appropriate, but keep the discussion relevant to the problem at hand.

- If you are not an SME, try to filter any discussion through the primary SME for your service. Too many people discussing at once can become overwhelming, so we should try to maintain a hierarchical structure to the call if possible.

Follow instructions from the Incident Commander.

- Is there no IC on the call?

  - Manually page them via Slack with `!ic page`. This will page the primary and backup ICs at the same time.

  - Never hesitate to page the IC. It's much better to have them and not need them than the other way around.
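If you run your own chat bot, you can mirror the `!ic page` behavior, which pages the primary and backup IC with a single command. This is a hypothetical sketch. The `ICRotation` type and the `page` callback are placeholders for whatever your bot uses to look up the IC rotation and reach PagerDuty. They are not PagerDuty's or Slack's API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ICRotation:
    """HYPOTHETICAL: who is currently primary and backup Incident Commander."""
    primary: str
    backup: str


def handle_command(text: str, rotation: ICRotation, page: Callable[[str], None]) -> List[str]:
    """Handle a chat message; '!ic page' pages both the primary and backup IC."""
    if text.strip().lower() != "!ic page":
        return []  # not our command; ignore it
    targets = [rotation.primary, rotation.backup]
    for person in targets:
        page(person)  # HYPOTHETICAL hook: call your real paging integration here
    return targets


if __name__ == "__main__":
    paged: List[str] = []
    handle_command("!ic page", ICRotation("alice", "bob"), paged.append)
    print(paged)  # ['alice', 'bob']
```

Injecting `page` as a callback keeps the command logic testable without sending real pages.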

Steps for Incident Commander

Resolve the incident as quickly and as safely as possible; use the Deputy to assist you. Delegate any tasks to relevant experts at your discretion.

Announce on the call and in Slack that you are the incident commander, who you have designated as deputy (usually the backup IC), and scribe.

Identify whether there is an obvious cause of the incident (recent deployment, spike in traffic, etc.) and delegate investigation to relevant experts.

- Use the service experts on the call to assist in the analysis. They can often confirm the cause quickly, but not always. When the cause is not positively known, it's the IC's call how to proceed. Confer with service owners and use their knowledge to help you.

Identify investigation and repair actions (roll back, rate-limit services, etc.) and delegate them to relevant service experts. Typical examples (obviously not an exhaustive list):

- Bad Deployment: Roll it back.

- Web Application Stuck/Crashed: Do a rolling restart.

- Event Flood: Validate automatic throttling is sufficient, adjust manually if not.

- Data Center Outage: Validate that automation has removed the bad data center. Force it to do so if not.

- Degraded Service Behavior without load: Gather forensic data (heap dumps, etc.), and consider doing a rolling restart.
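Some teams keep a symptom-to-action list like this as data, so that a bot or runbook page can surface it during a call. A minimal sketch follows. The symptom keys and the fallback message are hypothetical. The actions are copied from the list above, and anything unrecognized falls back to the IC's judgment.

```python
# Symptom -> first repair action, taken from the list above (not exhaustive).
RUNBOOK = {
    "bad deployment": "Roll it back.",
    "web application stuck/crashed": "Do a rolling restart.",
    "event flood": "Validate automatic throttling is sufficient; adjust manually if not.",
    "data center outage": "Validate that automation has removed the bad data center; force it to do so if not.",
    "degraded service behavior without load": "Gather forensic data (heap dumps, etc.), and consider a rolling restart.",
}

FALLBACK = "No runbook entry: the IC decides, with input from service experts."


def suggest_action(symptom: str) -> str:
    """Look up a first action for a symptom; unknown symptoms go to the IC."""
    return RUNBOOK.get(symptom.strip().lower(), FALLBACK)
```

The lookup only suggests a starting point. The IC still decides and delegates.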

Listen for prompts from your Deputy regarding severity escalations, decide whether we need to announce publicly, and instruct the Customer Liaison accordingly.

- Announcing publicly is at your discretion as IC. If you are unsure, then announce publicly ('If in doubt, tweet it out').

Keep track of your span of control. If the response starts to become larger, or the incident increases in complexity, consider splitting off sub-teams in order to get a more effective response.

Once the incident has recovered or is actively recovering, you can announce that the incident is over and that the call is ending. This usually indicates there's no more productive work to be done for the incident right now.

- Move the remaining, non-time-critical discussion to Slack.

- Follow up to ensure the customer liaison wraps up the incident publicly.

- Identify any post-incident clean-up work.

- You may need to perform debriefing/analysis of the underlying contributing factor.

Once the call is over, you can start to follow the steps from After an Incident.

Steps for Deputy

You are there to support the IC in whatever they need.

Monitor the status of the incident, and notify the IC if/when the incident escalates in severity level.

Follow instructions from the Incident Commander.

Once the call is over, you can start to follow the steps from After an Incident.

Steps for Scribe

You are there to document the key information from the incident in Slack.

Update the Slack room with who the IC is, who the Deputy is, and that you're the scribe (if not already done).

- e.g. 'IC: Bob Boberson, Deputy: Deputy Deputyson, Scribe: Writer McWriterson'
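If a bot posts the roles for you, a one-line formatter keeps the message consistent with the example above. A minimal sketch follows. The function name and the "TBD" placeholder for unfilled roles are our own choices.

```python
from typing import Optional


def roles_message(ic: str, deputy: Optional[str] = None, scribe: Optional[str] = None) -> str:
    """Format the role announcement, e.g. 'IC: Bob Boberson, Deputy: ..., Scribe: ...'."""
    roles = {"IC": ic, "Deputy": deputy, "Scribe": scribe}
    # Unfilled roles are shown as TBD so gaps are visible to everyone in the room.
    return ", ".join(f"{role}: {name or 'TBD'}" for role, name in roles.items())
```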

Start our status monitoring bot so that all responders can see the current state without needing to ask.

- OfficerURL can help you to monitor the status on Slack:

  - `!status` - Will tell you the current status.

  - `!status stalk` - Will continually monitor the status and report it to the room every 30s.
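OfficerURL's internals aren't covered here, so the sketch below only illustrates the `!status stalk` pattern: read the status, post it, then wait a fixed 30 seconds before the next report. `get_status` and `post` are hypothetical callables that stand in for your status source and Slack client. The loop is bounded by `rounds`, and `sleep` is injectable so the sketch can be tested without waiting. A real bot would instead run until someone stops it.

```python
import time
from typing import Callable


def stalk(get_status: Callable[[], str], post: Callable[[str], None],
          interval_s: float = 30.0, rounds: int = 3,
          sleep: Callable[[float], None] = time.sleep) -> None:
    """Post the current status every `interval_s` seconds, `rounds` times."""
    for i in range(rounds):
        post(f"Status: {get_status()}")
        if i < rounds - 1:
            sleep(interval_s)  # wait before the next report; no wait after the last one
```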

You should add notes to Slack when significant actions are taken, or findings are determined. You don't need to wait for the IC to direct this - use your own judgment.

- You should also add `TODO` notes to the Slack room that indicate follow-ups slated for later.
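Because `TODO` notes share a consistent prefix, you can collect the follow-ups from the channel history when the call ends. A sketch follows. It assumes the messages have already been fetched as plain strings and that notes start with `TODO` (in any case). Fetching the history from Slack is out of scope here.

```python
import re
from typing import Iterable, List

# "TODO" as a whole word at the start, then optional ':', '-' or spaces, then the note.
TODO_RE = re.compile(r"^\s*TODO\b[:\s-]*(.*)$", re.IGNORECASE)


def collect_todos(messages: Iterable[str]) -> List[str]:
    """Return the text of every message that starts with TODO, in order."""
    todos = []
    for msg in messages:
        match = TODO_RE.match(msg)
        if match and match.group(1):  # skip a bare "TODO" with no note
            todos.append(match.group(1).strip())
    return todos
```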

Follow instructions from the Incident Commander.

Once the call is over, you can start to follow the steps from After an Incident.

Steps for Subject Matter Experts

You are there to support the Incident Commander in identifying the cause of the incident, suggesting and evaluating repair actions, and following through on the repair actions.

Pagerduty Slack Integration

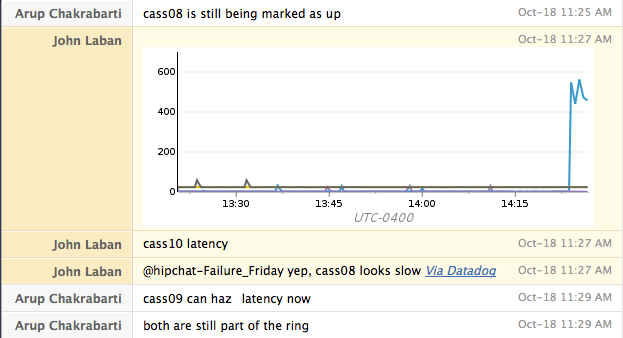

Investigate the incident by analyzing any graphs or logs at your disposal. Announce all findings to the incident commander.

- If you are unsure of the cause that's fine. Simply state that you are investigating and provide regular updates to the IC.

Announce all suggestions for resolution to the Incident Commander; it is their decision how to proceed. Do not take any actions unless told to do so!

Follow instructions from the Incident Commander.

Once the call is over, you can start to follow the steps from After an Incident.

Steps for Customer Liaison

Be on stand-by to post public-facing messages regarding the incident.

You will typically be required to update the status page and to send tweets from our various accounts at certain times during the call.

Follow instructions from the Incident Commander.

Once the call is over, you can start to follow the steps from After an Incident.

Steps for Internal Liaison

You are there to provide updates to internal stakeholders and to mobilize additional internal responders as necessary.

Be prepared to page other people as directed by the Incident Commander.

Notify internal stakeholders as necessary, adding subscribers to the PagerDuty incident. We have predefined teams called 'SEV-1 Stakeholders' and 'SEV-2 Stakeholders' which can be used.

Provide regular status updates in Slack (roughly every 30 minutes) to the executive team, giving an executive summary of the current status. Keep it short and to the point, and use `@here`.

Follow instructions from the Incident Commander.

Once the call is over, you can start to follow the steps from After an Incident.
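The Internal Liaison steps above call for a short `@here` summary roughly every 30 minutes. A small helper can track that cadence so updates aren't missed during a long call. A minimal sketch follows, in which the function names and message template are hypothetical. When you post through Slack's Web API, the `@here` mention is written as `<!here>` in the message text.

```python
from datetime import datetime, timedelta
from typing import Optional

UPDATE_INTERVAL = timedelta(minutes=30)  # "roughly every 30 minutes"


def update_due(last_update: Optional[datetime], now: datetime) -> bool:
    """True if no update has been sent yet or the interval has elapsed."""
    return last_update is None or now - last_update >= UPDATE_INTERVAL


def executive_update(severity: str, summary: str, next_step: str) -> str:
    """Short executive summary; '<!here>' is Slack's message markup for @here."""
    return f"<!here> [{severity}] {summary} Next: {next_step}"
```

A reminder loop could check `update_due` once a minute and prompt the Internal Liaison rather than post automatically. That keeps a human in charge of the wording.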